The moment that many AI developers and enthusiasts have been waiting for has arrived: the ChatGPT API is now available.

Get ready to see nearly every app on the planet try to integrate ChatGPT over the coming weeks and months. Whether that will turn them into viral sensations like ChatGPT remains to be seen, but for now it's certainly exciting.

In this article, let's walk through how to get started with the ChatGPT API and look at a few key model details. Specifically, we'll follow OpenAI's ChatGPT API release article and its Chat Completions guide.

Note that in this release OpenAI also launched API access to their speech recognition model Whisper, although in this article we'll focus on the ChatGPT API.


Early uses of the ChatGPT API

Before we get into how to actually use the ChatGPT API, let's see how developers have already started to integrate the new model into their own apps.

As the docs highlight, a few use cases of the ChatGPT API include:

- Draft emails or other written content

- Answer questions about a set of documents

- Create conversational agents

- Create tutors for a range of subjects

- Translate languages, write code, and much more

Here are the large companies that OpenAI highlights that received early access and have already integrated ChatGPT into their apps:

- Snapchat introduced My AI for Snapchat+, a customizable chatbot that provides recommendations and is powered by the ChatGPT API.

- Quizlet launched Q-Chat, an AI tutor that provides students with relevant study materials through a ChatGPT-powered experience.

- Shopify's consumer app Shop uses the ChatGPT API to power its new AI-powered shopping assistant, which offers personalized shopping recommendations.

Aside from the early-access users, here are a couple of interesting apps that were released within hours of the ChatGPT API launch. The first, WhatOnEarth, is a search engine built on the new ChatGPT API:

Wow! #ChatGPT's API was just released 1 hour ago and @naklecha has already built a search engine app using it! 🤯👇 https://t.co/Z4hFRMzC2r

— DataChazGPT 🤯 (not a bot) (@DataChaz) March 1, 2023

Here's another example of a developer building a command line chatbot in just 16 lines of Python...

Built a command line chatbot in Python with the ChatGPT API. 16 lines of code. I'm not sure anyone's ever shipped a more powerful devtool than OpenAI. pic.twitter.com/NiStQpC4zV

— Greg Baugues (@greggyb) March 2, 2023

We'll do a post on more interesting applications of the ChatGPT API, but for now let's see how we can get started with it.

ChatGPT API: model details

The ChatGPT model can now be accessed via the API as gpt-3.5-turbo, which is the exact same model used in the viral web version of ChatGPT.

First things first, one of the slightly unexpected parts of the API release is how much cheaper it is than previous models...

Through a series of system-wide optimizations, we’ve achieved 90% cost reduction for ChatGPT since December; we’re now passing through those savings to API users.

Specifically, the ChatGPT API is priced at $0.002 per 1k tokens, which is 10x cheaper than existing GPT-3.5 models.
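To make that price concrete, here's a minimal sketch of the arithmetic. The function name and the one-million-token workload are made up for illustration; the only number taken from the release is the $0.002 per 1k tokens price.

```python
# Launch price quoted above for gpt-3.5-turbo (USD per 1,000 tokens).
PRICE_PER_1K_TOKENS = 0.002


def estimate_cost(total_tokens: int, price_per_1k: float = PRICE_PER_1K_TOKENS) -> float:
    """Return the estimated cost in USD for a given number of tokens."""
    if total_tokens < 0:
        raise ValueError("total_tokens must be non-negative")
    return total_tokens / 1000 * price_per_1k


# Hypothetical workload: one million tokens in total (prompts plus replies).
print(f"${estimate_cost(1_000_000):.2f}")  # prints $2.00
```

In other words, a million tokens costs about two dollars at this price.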

Another nice thing is that it's very easy to migrate from text-davinci-003 to gpt-3.5-turbo with just small adjustments to the Completions request.

One key difference between ChatGPT API and previous models is how they consume text. Specifically, previous versions of GPT-3 would consume unstructured text which is represented as a sequence of tokens.

In case you're unfamiliar with tokens...

Tokens can be thought of as pieces of words. Before the API processes the prompts, the input is broken down into tokens.

More specifically:

- 1 token ~= 4 chars in English

- 1,500 words ~= 2048 tokens

For example, the string “ChatGPT is great!” is encoded into six tokens: [“Chat”, “G”, “PT”, “ is”, “ great”, “!”].
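If we just want a ballpark figure, the "1 token ~= 4 chars" rule of thumb can be turned into a tiny estimator. This is only a rough sketch (the function name is our own), not how the API actually tokenizes text; for exact counts you would need a real tokenizer such as OpenAI's tiktoken package.

```python
import math

CHARS_PER_TOKEN = 4  # rule of thumb from above: 1 token ~= 4 characters of English


def estimate_tokens(text: str) -> int:
    """Roughly estimate the number of tokens in English text from its length."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


print(estimate_tokens("ChatGPT is great!"))  # prints 5
```

Note that the estimate comes out at 5, while the real encoding listed above has six tokens, so treat the heuristic as an approximation only.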

The total number of tokens in an API call is important as it affects:

- How much your API call costs, as you pay per token

- How long your API call takes, as writing more tokens takes more time

- Whether your API call works at all, as total tokens must be below the model’s maximum limit (4096 tokens for gpt-3.5-turbo-0301)
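Since the limit applies to the total of prompt and reply tokens, it can help to check the budget before sending a request. Here's a small sketch of that check; the function name is hypothetical, and we're assuming that a total exactly equal to the limit is still accepted.

```python
MAX_CONTEXT_TOKENS = 4096  # limit quoted above for gpt-3.5-turbo-0301


def fits_in_context(prompt_tokens: int, max_reply_tokens: int,
                    limit: int = MAX_CONTEXT_TOKENS) -> bool:
    """Return True if the prompt plus the reserved reply budget stays within the limit."""
    return prompt_tokens + max_reply_tokens <= limit


print(fits_in_context(3000, 500))  # True: 3500 tokens fit within 4096
print(fits_in_context(4000, 500))  # False: 4500 tokens exceed 4096
```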

Getting started with the ChatGPT API

Now let's walk through OpenAI's ChatGPT documentation and see how we can interact with the new model endpoint.

We'll be using Google Colab for this, so first we need to run !pip install openai in our notebook and then set our OpenAI API key with openai.api_key = "YOUR-API-KEY".
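Rather than pasting the key directly into a notebook cell, one option is to read it from an environment variable. The sketch below is just one way to do that: the helper name is our own, and OPENAI_API_KEY is assumed here as a conventional variable name.

```python
import os


def load_api_key(env_var: str = "OPENAI_API_KEY", environ=os.environ) -> str:
    """Read the API key from the environment and fail loudly if it is missing."""
    key = environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable first")
    return key


# Demo with a placeholder mapping so the sketch runs without a real key.
print(load_api_key(environ={"OPENAI_API_KEY": "sk-placeholder"}))

# In the notebook, after `import openai`, this would become:
# openai.api_key = load_api_key()
```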

Here's an example of how to use the new ChatCompletion endpoint:

import openai

response = openai.ChatCompletion.create(

model="gpt-3.5-turbo",

messages=[

{"role": "system", "content": "You are a helpful assistant."},

{"role": "user", "content": "Who won the world series in 2020?"},

{"role": "assistant", "content": "The Los Angeles Dodgers won the World Series in 2020."},

{"role": "user", "content": "Where was it played?"}

]

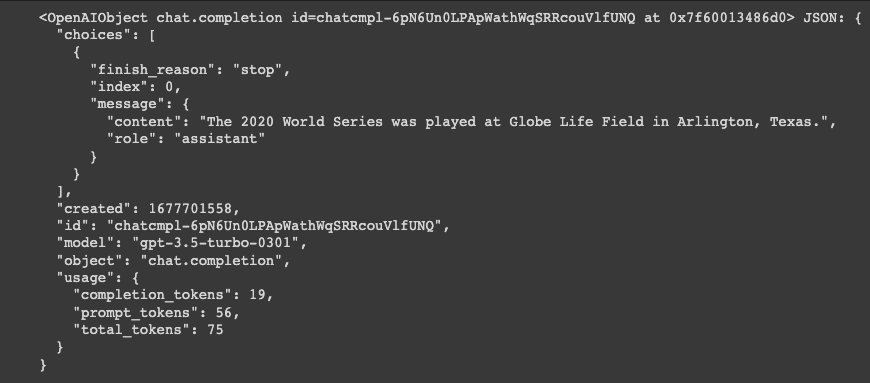

)Here's what the full response looks like:

We can access the actual content of the response as follows:

    response['choices'][0]['message']['content']

    The 2020 World Series was played at Globe Life Field in Arlington, Texas.
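The reply text is usually not the only thing worth pulling out of the response. Here's a small sketch of a helper that also grabs the finish reason and token usage. The sample dictionary is a hand-written stand-in rather than real API output: its token counts are made up, and the usage and finish_reason fields reflect our understanding of the response format.

```python
def extract_reply(response: dict) -> dict:
    """Pull the reply text plus a few useful fields out of a chat response."""
    choice = response["choices"][0]
    return {
        "content": choice["message"]["content"].strip(),
        "finish_reason": choice.get("finish_reason"),
        "total_tokens": response.get("usage", {}).get("total_tokens"),
    }


# Hand-written stand-in for an API response (token counts are invented).
sample_response = {
    "choices": [
        {
            "index": 0,
            "message": {
                "role": "assistant",
                "content": "The 2020 World Series was played at Globe Life Field in Arlington, Texas.",
            },
            "finish_reason": "stop",
        }
    ],
    "usage": {"prompt_tokens": 57, "completion_tokens": 17, "total_tokens": 74},
}

reply = extract_reply(sample_response)
print(reply["content"])
print(reply["total_tokens"])  # prints 74
```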

Let's break this down.

One key difference between the ChatGPT API and previous GPT-3 models is that you can send an array of messages for the completions with different "roles" such as "system", "user" and "assistant".

The main input is the messages parameter. Messages must be an array of message objects, where each object has a role (either “system”, “user”, or “assistant”) and content (the content of the message). Conversations can be as short as 1 message or fill many pages.
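Because a malformed messages array only fails once the request is sent, a quick local check can save a round trip. The following is a minimal sketch of such a check based only on the rules described above; the function name is hypothetical and the real API may enforce more than this.

```python
ALLOWED_ROLES = {"system", "user", "assistant"}


def validate_messages(messages):
    """Check that messages is a non-empty list of {"role", "content"} objects."""
    if not isinstance(messages, list) or not messages:
        raise ValueError("messages must be a non-empty list")
    for i, message in enumerate(messages):
        if not isinstance(message, dict):
            raise ValueError(f"message {i} must be a dict")
        if message.get("role") not in ALLOWED_ROLES:
            raise ValueError(f"message {i} has an invalid role: {message.get('role')!r}")
        if not isinstance(message.get("content"), str):
            raise ValueError(f"message {i} needs string content")
    return messages


messages = validate_messages([
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Who won the world series in 2020?"},
])
print(len(messages))  # prints 2
```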

Here's a breakdown of each role...

System

The "system" message sets the overall behavior of the assistant. In the example above, ChatGPT was instructed with “You are a helpful assistant.”

In other words, the system role is a gentle instruction. For example, here is one of the system messages OpenAI used for ChatGPT:

You are ChatGPT, a large language model trained by OpenAI. Answer as concisely as possible. Knowledge cutoff: {knowledge_cutoff} Current date: {current_date}

User

Next, the user messages provide specific instructions for the assistant. These will mainly come from the end users of an application, but they can also be hard-coded by developers for specific use cases.

Assistant

The assistant messages store previous ChatGPT responses. They can also be written by developers to provide examples of the desired behavior (kind of like small-scale fine-tuning).
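To illustrate that last point, here's a rough sketch of how developer-written user and assistant pairs could be placed ahead of the real question. The helper name and the example pairs are made up for illustration.

```python
def build_few_shot_messages(system_prompt, examples, user_input):
    """Build a messages list with hand-written example exchanges before the real input."""
    messages = [{"role": "system", "content": system_prompt}]
    for example_input, example_output in examples:
        # Each example is a user message followed by the assistant reply we want to see.
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})
    messages.append({"role": "user", "content": user_input})
    return messages


few_shot = build_few_shot_messages(
    "You are a helpful assistant that translates English to French.",
    [("Hello", "Bonjour"), ("Thank you", "Merci")],
    "Good night",
)
print([m["role"] for m in few_shot])
# prints ['system', 'user', 'assistant', 'user', 'assistant', 'user']
```

The resulting list can be passed as the messages argument of a chat request.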

One big difference in the ChatGPT API is that it lets you send an array of messages for completions.

This is incredibly useful! Especially since it looks like you can insert a 3rd party "system" role that could help guide the assistant in certain circumstances.

EXCITING TIMES! pic.twitter.com/Ummxr9Hwvs

— Dan Shipper 📧 (@danshipper) March 1, 2023

One key point is that the chat conversation history needs to be provided in each ChatGPT API request.

In the above example, we need to pass all the previous user and assistant messages for the "Where was it played?" question to make sense. As OpenAI highlights:

Because the models have no memory of past requests, all relevant information must be supplied via the conversation. If a conversation cannot fit within the model’s token limit, it will need to be shortened in some way.
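Here's one way this could look in practice: keep the history in a list, resend it on every turn, and drop the oldest exchanges once a rough token estimate exceeds a budget. This is only a sketch under several assumptions: the helper names are our own, the 3,000-token budget is arbitrary (it just leaves room for the reply), token counts use the 4-characters-per-token rule of thumb, and fake_send stands in for the real API call so the example runs offline.

```python
import math


def approx_tokens(message):
    """Very rough size estimate using the ~4 characters per token rule of thumb."""
    return math.ceil(len(message["content"]) / 4)


def trim_history(messages, budget):
    """Drop the oldest non-system messages until the estimate fits the budget."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]
    # Always keep at least the most recent message, even if it is over budget.
    while len(rest) > 1 and sum(map(approx_tokens, system + rest)) > budget:
        rest.pop(0)
    return system + rest


def chat_turn(history, user_input, send, budget=3000):
    """Append the user message, send the trimmed history, and record the reply."""
    history.append({"role": "user", "content": user_input})
    history[:] = trim_history(history, budget)
    reply = send(history)
    history.append({"role": "assistant", "content": reply})
    return reply


def fake_send(messages):
    """Stand-in for the API call; a real version would send `messages` to the model."""
    return f"(reply to: {messages[-1]['content']})"


history = [{"role": "system", "content": "You are a helpful assistant."}]
chat_turn(history, "Who won the world series in 2020?", fake_send)
chat_turn(history, "Where was it played?", fake_send)
print([m["role"] for m in history])
# prints ['system', 'user', 'assistant', 'user', 'assistant']
```

Dropping the oldest messages is the simplest way to shorten a conversation; summarizing earlier turns is another option, at the cost of an extra request.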

Another way to handle chat history is with the LangChain memory library, although we'll discuss that in more detail in an upcoming article.

Prompt engineering best practices for the ChatGPT API

Similar to previous models, there are several prompt engineering best practices that are worth noting when it comes to the ChatGPT API.

Specifically, OpenAI notes that the "system" message that instructs ChatGPT to be a helpful assistant is not always given much weight:

In general, gpt-3.5-turbo-0301 does not pay strong attention to the system message.

As mentioned, the system message is a gentle nudge in the direction you want the assistant to take, so more important instructions should be included in the "user" message.

If you're not getting the results you want, OpenAI suggests the following prompt engineering best practices:

- Be more explicit in your instructions

- Specify the exact format you want the answer in

- Ask the model to think step by step

- Ask the model to debate pros and cons before deciding on an answer
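These suggestions are easy to fold into a small prompt-building helper. The sketch below is just one possible way to do it; the function name, parameters, and exact wording of the added instructions are our own and not something OpenAI prescribes.

```python
def build_user_prompt(task, output_format=None, step_by_step=False, weigh_pros_and_cons=False):
    """Combine a task with optional instructions based on the tips listed above."""
    parts = [task.strip()]
    if weigh_pros_and_cons:
        parts.append("Before deciding on an answer, debate the pros and cons.")
    if step_by_step:
        parts.append("Think step by step.")
    if output_format:
        parts.append(f"Format the answer exactly as: {output_format}")
    return "\n".join(parts)


prompt = build_user_prompt(
    "Summarize the article in plain language.",
    output_format="three bullet points",
    step_by_step=True,
)
print(prompt)
```

Since the system message may not be followed closely, the resulting string is meant to be sent as the content of a "user" message.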

We'll be going over prompt engineering best practices in more detail soon. For now, you can check out the OpenAI guide on techniques to improve reliability.

ChatGPT vs. Completions

As mentioned, the ChatGPT model has similar capabilities to the text-davinci-003 model. Since it's 10x cheaper, OpenAI recommends it for most use cases.

As we saw, the ChatGPT API request is very similar to a Completions request. For example, here's how you would convert a simple translation assistant from a Completions API request to a ChatGPT API request:

GPT-3 Completions request

    # Define the text you want to translate
    text = "Hello, how are you?"

    # Completions API request to generate the translation
    response = openai.Completion.create(
        engine="text-davinci-003",
        prompt=f"Please translate the following English text to French: '{text}'",
        max_tokens=60,
        n=1,
        stop=None,
        temperature=0.7,
    )

    # Print the translated text
    translation = response.choices[0].text.strip()
    print(translation)

ChatGPT API request

    text = "Hello, how are you?"

    response = openai.ChatCompletion.create(
        model="gpt-3.5-turbo",
        messages=[
            {"role": "system", "content": "You are a helpful assistant that translates English to French."},
            {"role": "user", "content": f"Translate the following English text to French: '{text}'"}
        ]
    )

    print(response['choices'][0]['message']['content'])

Summary: ChatGPT API Release

As we've seen, OpenAI has made it very simple to migrate from the Completions API to the ChatGPT API.

With the added functionality of multiple "roles", the ChatGPT API makes it much easier to build conversational agents and assistants for more domain-specific use cases.

There's no question that it'll be an exciting few months (and years) as we see all the new applications that come from this release, as well as existing apps that integrate ChatGPT's functionality.

In the meantime, we'll continue releasing new ChatGPT programming and prompt engineering tutorials to help you make the most of this major release.

You can check out our video tutorial on getting started with the ChatGPT API below: