Welcome to This Week in AI, a roundup of the most important developments in AI, including research, tutorials, and news.

This week, we have stories about NVIDIA’s newest AI chip, Anthropic launching its new language model, and Microsoft bringing AI and Web3 together with Aptos.

- NVIDIA introduces its latest AI chip, which it says will lower the cost of running LLMs

- Anthropic officially launches a new language model called Claude Instant

- Microsoft and Aptos team up to bring AI to the world of Web3

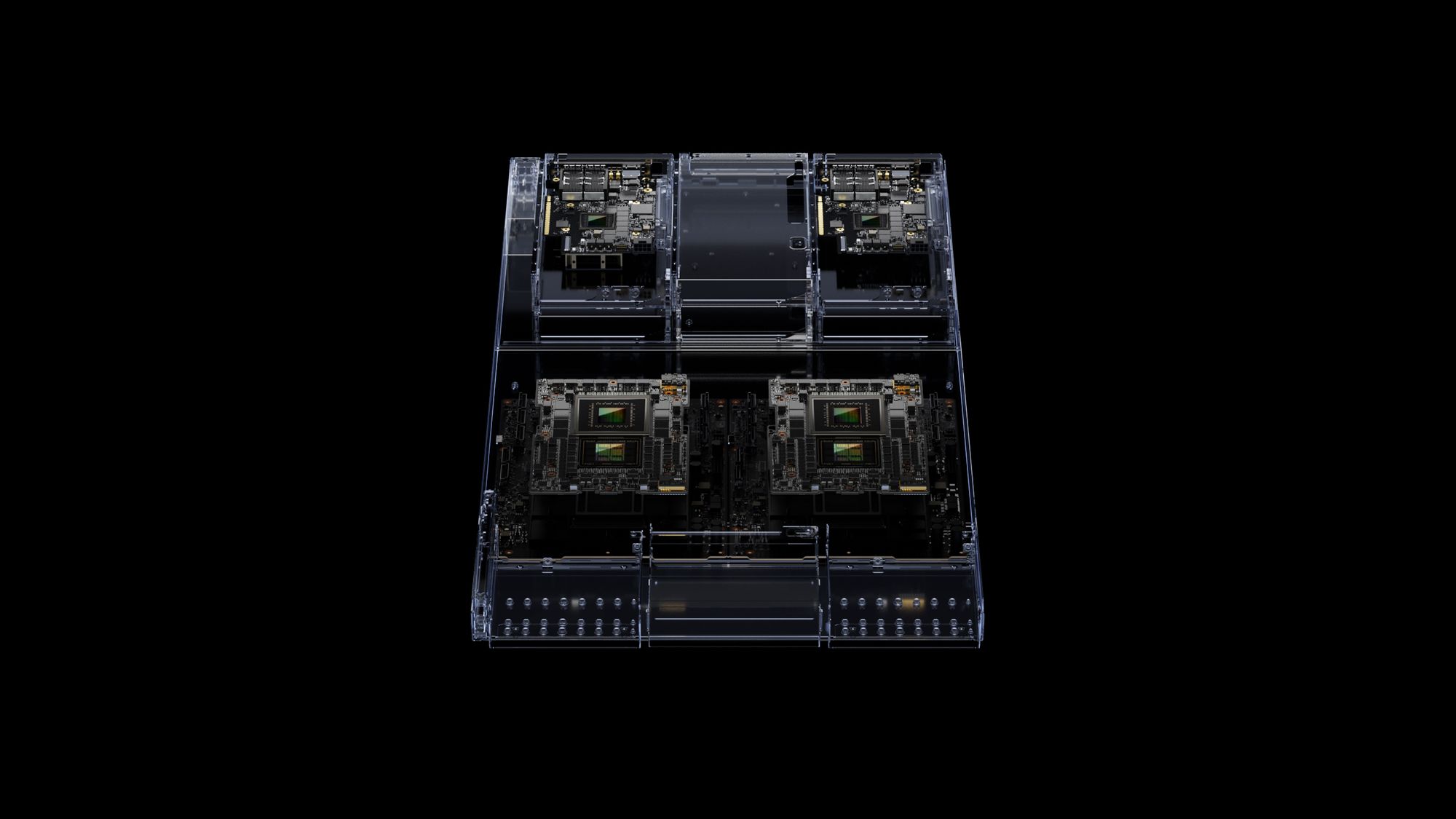

NVIDIA Launches Next-Gen AI Chip

NVIDIA, the world’s largest AI chip maker, has just announced its next-generation AI chip. CEO Jensen Huang says it will drastically lower the cost of AI workloads such as running LLMs and help scale out the world’s data center infrastructure at a much faster rate.

The GH200 is the latest AI chip from NVIDIA, which is attempting to fend off heavy competition from companies like AMD, Alphabet, and Amazon. The new chip uses the same GPU as NVIDIA’s highly coveted H100, but pairs it with 141 gigabytes of memory and a 72-core Arm processor.

“The new GH200 Grace Hopper Superchip platform delivers this with exceptional memory technology and bandwidth to improve throughput, the ability to connect GPUs to aggregate performance without compromise, and a server design that can be easily deployed across the entire data center.” - Jensen Huang

Huang says the new processors will be available by the second quarter of 2024, although no price has been announced yet. NVIDIA currently has about an 80% share of the AI chip market.

NVIDIA has pointed out that the “inference cost of large language models will drop significantly” with the introduction of the GH200 chip. Inference is the stage that comes after training: a trained model is run on new inputs to produce outputs, such as an LLM generating a reply to a prompt. Because that cost is incurred every time a model is used, cheaper inference lowers the ongoing cost of running AI services.
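To make the difference between training and inference concrete, here is a toy sketch in plain Python. It has nothing to do with how the GH200 or any real LLM works internally; the tiny linear model, the function names, and the data are all made up for illustration. Training loops over the data many times to adjust the weights, while inference is a single pass with the weights held fixed.

```python
# Toy illustration only: training adjusts weights, inference just applies them.

def train(xs, ys, lr=0.05, epochs=1000):
    """Fit y ~ w*x + b by gradient descent (the expensive, one-off step)."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def infer(w, b, x):
    """Inference: one forward pass with fixed weights, no learning involved."""
    return w * x + b


# Hypothetical data that follows y = 2x + 1.
w, b = train([0, 1, 2, 3, 4], [1, 3, 5, 7, 9])

# Every call to infer() is one "inference"; this is the per-use cost.
prediction = infer(w, b, 10)
print(round(prediction, 3))
```

Training happens once, but a deployed model runs inference for every request, which is why the per-inference cost matters so much at scale.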


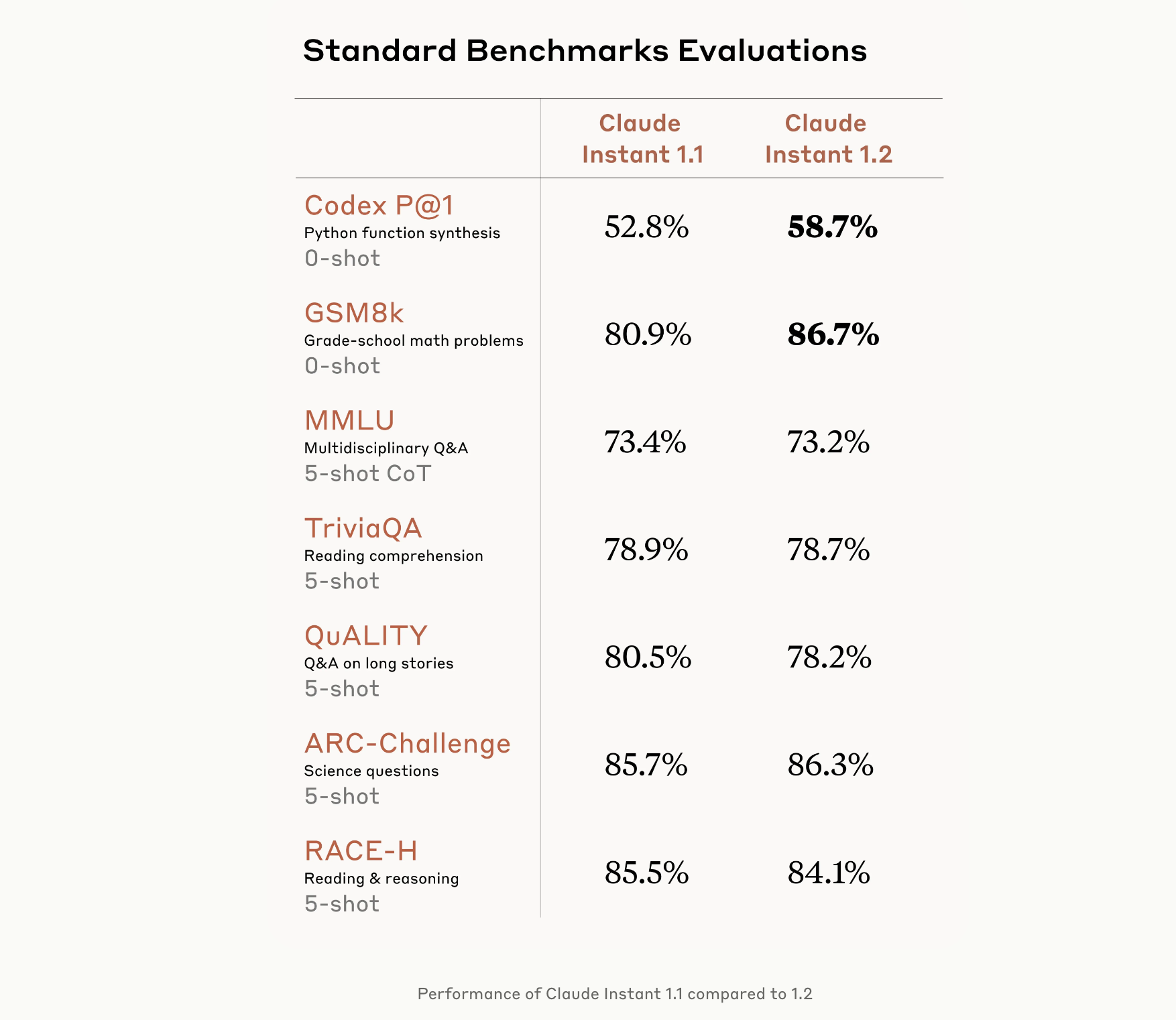

Anthropic Launches Claude Instant 1.2

- Anthropic released the latest version of its Claude Instant LLM, Claude Instant 1.2

- Claude Instant 1.2 is available through Anthropic’s API

- The model posts impressive scores in coding and math and is both faster and cheaper than Anthropic’s flagship LLM, Claude 2

Anthropic has officially launched the latest version of its faster, cheaper language model, Claude Instant. It is available through Anthropic’s API, so developers can build it directly into websites or other platforms. Anthropic is an AI startup that was founded by former OpenAI executives and recently raised $450M in funding from investors including Google.

Anthropic states that Claude Instant 1.2 shows significant improvements in math, coding, and reasoning. In Anthropic’s reported benchmarks, its scores on both math and coding improved by about 6 percentage points over the previous version.

The latest version generates longer, more robust outputs and is said to do a much better job of following user instructions. It also has the same context window as Claude 2: an impressive 100,000 tokens.
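For a rough sense of what a 100,000-token window means in practice, here is a small Python sketch that estimates whether a piece of text would fit. It assumes about 4 characters per token, which is only a rule of thumb for English text and not Anthropic’s actual tokenizer. The function names and the reply allowance are hypothetical as well.

```python
import math

CONTEXT_WINDOW_TOKENS = 100_000  # context window size quoted above
CHARS_PER_TOKEN = 4.0            # assumption: rough average for English text


def estimate_tokens(text: str) -> int:
    """Very rough token estimate; a real tokenizer will give different counts."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_reply: int = 1_000) -> bool:
    """Check whether the text plus a reply allowance fits in the window."""
    return estimate_tokens(text) + reserved_for_reply <= CONTEXT_WINDOW_TOKENS


short_doc = "word " * 1_000    # 5,000 characters
long_doc = "word " * 100_000   # 500,000 characters

print(estimate_tokens(short_doc), fits_in_context(short_doc))
print(estimate_tokens(long_doc), fits_in_context(long_doc))
```

Under that assumption, 100,000 tokens is on the order of 400,000 characters of English text, so whole reports or long transcripts can go into a single prompt.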


Microsoft and Aptos Team Up to Bring AI to Web3

Earlier this week, Microsoft announced a new partnership with the layer-1 blockchain company Aptos. Under the partnership, Aptos’ blockchain data will be used to help train Microsoft’s AI models, and Aptos will run its validator nodes on Microsoft’s Azure cloud.

Aptos developers will have full access to Microsoft’s AI-supported tools when creating applications for the network. Microsoft and Aptos believe that together they can use AI to bring the power of Web3 to financial institutions.

Aptos might not receive the same attention as other layer-1 blockchains like Ethereum, but the network has some interesting technology. As of now, it can process up to 160,000 transactions per second (TPS), with a goal of several hundred thousand by the end of 2023. Aptos is the 34th-largest cryptocurrency by market capitalization.

Mo Shaikh, the CEO of Aptos, explained that blockchain technology can help keep AI in check. Shaikh went on to say that:

“everything we capture onchain is verified and that verification can help train these models in a way that you’re relying on credible information.”

Aptos’ blockchain has only been online since October 2022, but the network’s performance and the credibility of Shaikh, who previously worked on the Diem crypto project at Meta, were enough to make it an attractive partner for Microsoft.


That's it for this edition of This Week in AI. If you were forwarded this newsletter and would like to receive it, you can sign up here.