OpenAI hosted their first developer day yesterday, and they certainly did not disappoint.

From the introduction of AI agents through the new Assistants API, multiple function calling, Vision, DALLE...and of course the introduction of GPTs, I'm still quite blown away by the speed at which OpenAI ships.

In this guide, we'll discuss the key highlights from OpenAI's DevDay. You can watch the keynote below and find their blog post on product releases here.

TL;DR: here are the top OpenAI DevDay announcements:

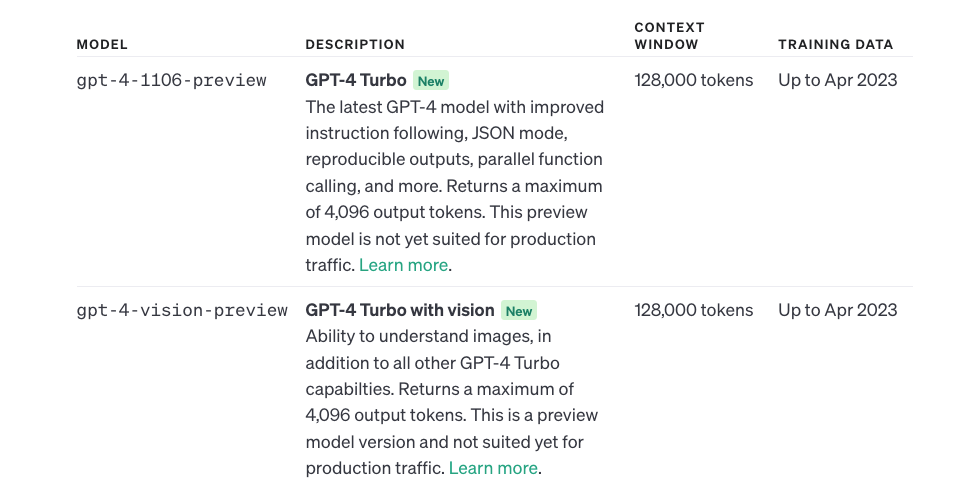

- 🚀 GPT-4 Turbo: The new GPT-4 Turbo model now supports a 128K context window...aka you can feed it a 300 page book as context.

- 🛠️ Assistants API: The new Assistants API provides access to three types of tools: Code Interpreter, Retrieval, and Function calling.

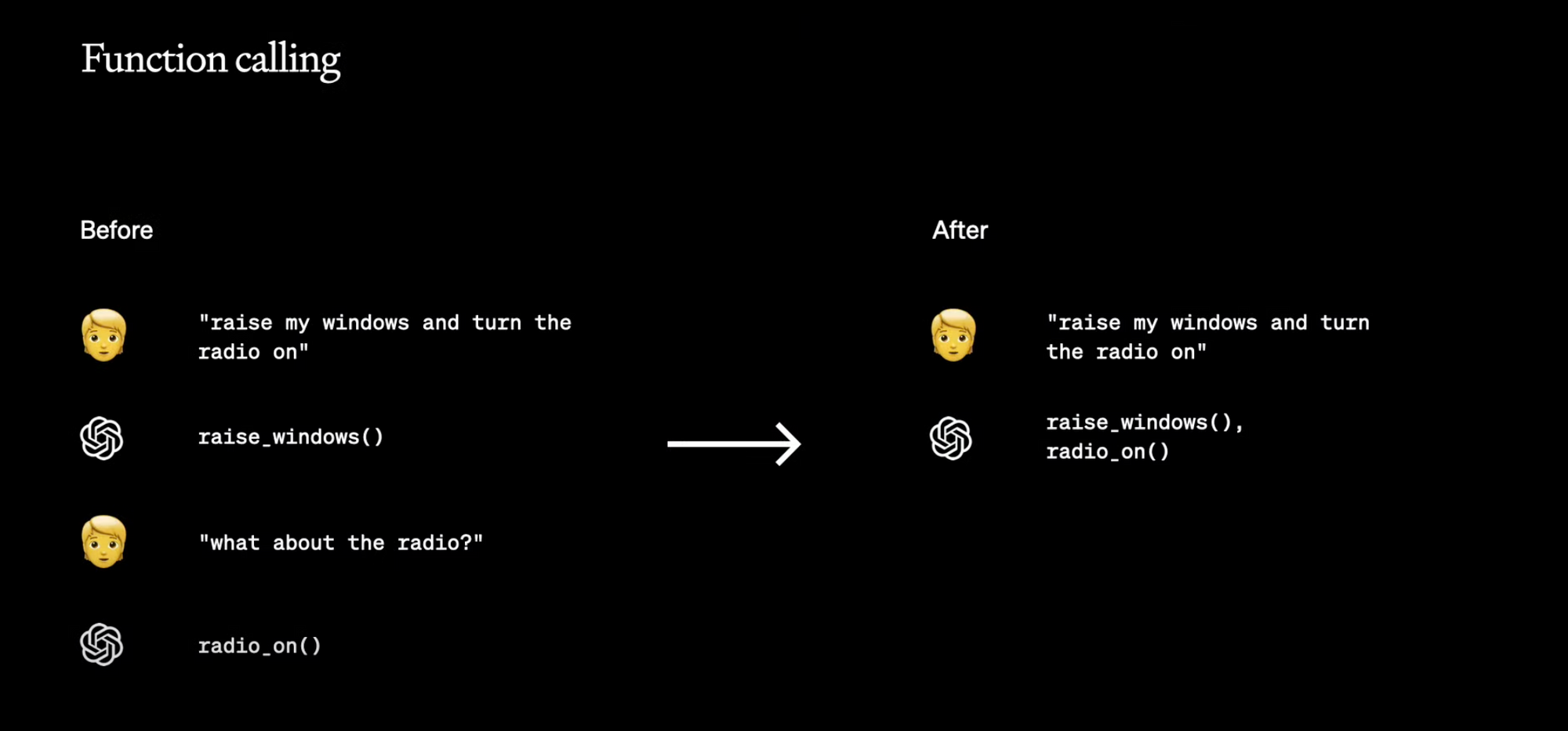

- ♻️ Parallel function calling: They have enabled multiple function calling, allowing you to build AI that accomplishes multiple tasks in a single prompt.

- 👀 Vision: GPT-4 Turbo now accepts images as inputs, useful for generating captions, analyzing images in detail, etc.

- 🖌️ DALL·E 3: Now available in the API, enabling you to generate images and designs programmatically.

- 🗣️ Text-to-Speech (TTS): The new TTS model offers human-quality speech from text with six available voices.

- 🔬 Fine tuning: Fine tuning for GPT-3.5 Turbo 16k, an experimental access program for GPT-4 fine tuning, and custom models for enterprises were introduced.

- 💸 Pricing & rate limits: Lower prices & higher rate limits across the platform are available for developers.

Below we'll discuss each of these in a bit more detail, or you can check out the keynote below:

GPT-4 Turbo with 128K Context

First off, Sam Altman introduced their new model GPT-4 Turbo, which now has a 128k context window.

That's 300 pages of a book, 16 times longer than our current 8k context. - Sam Altman

In addition to length, the new model is said to be much more accurate over a long context. From personal experience, I have definitely noticed that if you push the limits of the current 8k window, you almost always lose some details and accuracy...so let's hope that the increased accuracy holds true.

Also, this new model has training data up to April 2023 with continuous upgrades...so no more annoying 2021 cutoff date.
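To get a feel for what 128k tokens means in practice, here's a rough sketch that estimates whether a document fits in the context window. The 0.75 words-per-token ratio is a common rule of thumb and not an exact tokenizer, and the function names and the reserved output budget are my own assumptions for illustration.

```python
import math

# Rule-of-thumb ratio (an assumption, not a real tokenizer): ~0.75 English words per token.
WORDS_PER_TOKEN = 0.75
GPT4_TURBO_CONTEXT = 128_000  # nominal "128k" window, in tokens
GPT4_CONTEXT = 8_000          # nominal "8k" window, in tokens


def estimate_tokens(text: str) -> int:
    """Estimate the token count from a whitespace word count."""
    return math.ceil(len(text.split()) / WORDS_PER_TOKEN)


def fits_in_context(text: str, context_tokens: int, reserved_for_output: int = 4_000) -> bool:
    """Check whether the text plus a reserved output budget fits in the window."""
    return estimate_tokens(text) + reserved_for_output <= context_tokens


# A stand-in "book" of 90,000 words.
book = "word " * 90_000
print(GPT4_TURBO_CONTEXT // GPT4_CONTEXT)         # how many 8k windows fit in 128k
print(fits_in_context(book, GPT4_TURBO_CONTEXT))  # fits in the 128k window?
print(fits_in_context(book, GPT4_CONTEXT))        # fits in the 8k window?
```

For real work you'd want to count tokens with an actual tokenizer, since the ratio varies a lot by language and content.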

Parallel Function Calling

This is a big one in my opinion.

I've been working with function calling quite frequently for the past several months, and have experienced first hand the issues with single function calls. Essentially, if you asked a question that required multiple API calls to answer correctly, it would fail.

For example, I've been developing a GPT-4 enabled financial analyst with access to real-time stock market data. With the previous single-step function calling, suppose you ask it what Tesla's net profit was in Q3 2023 and how that compares to its ROE ratio. Answering that requires two API calls: one for the income statements and another for the financial ratios. Of course, the user isn't always going to know these limitations, so they might be disappointed if you only answer one of the two questions.

Now, instead of having the user ask follow-ups for requests they already made, you can just make multiple function calls in a single request.

Essentially, parallel function calling will be a key aspect of building AI agents going forward.
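Here's a minimal sketch of what handling this looks like on the application side, using the Tesla example. It assumes the model's response contains a list of tool calls shaped like the Chat Completions format (an `id` plus a function `name` and JSON-encoded `arguments`). The two functions are hypothetical stand-ins for real market-data calls and return placeholder values, and the tool calls are simulated so the snippet runs without an API key.

```python
import json


# Hypothetical stand-ins for real market-data API calls (values are placeholders).
def get_income_statement(ticker: str, period: str) -> dict:
    return {"ticker": ticker, "period": period, "net_income": None}


def get_financial_ratios(ticker: str, period: str) -> dict:
    return {"ticker": ticker, "period": period, "roe": None}


AVAILABLE_FUNCTIONS = {
    "get_income_statement": get_income_statement,
    "get_financial_ratios": get_financial_ratios,
}


def run_tool_calls(tool_calls: list) -> list:
    """Run every tool call from one model response and build the reply messages."""
    messages = []
    for call in tool_calls:
        function = AVAILABLE_FUNCTIONS[call["function"]["name"]]
        arguments = json.loads(call["function"]["arguments"])
        messages.append({
            "role": "tool",
            "tool_call_id": call["id"],  # ties each result back to its request
            "content": json.dumps(function(**arguments)),
        })
    return messages


# Simulated tool calls, as if the model asked for both functions in one response.
example_tool_calls = [
    {"id": "call_1", "type": "function", "function": {
        "name": "get_income_statement",
        "arguments": '{"ticker": "TSLA", "period": "Q3 2023"}'}},
    {"id": "call_2", "type": "function", "function": {
        "name": "get_financial_ratios",
        "arguments": '{"ticker": "TSLA", "period": "Q3 2023"}'}},
]

tool_messages = run_tool_calls(example_tool_calls)
print(len(tool_messages))
```

The tool messages would then be appended to the conversation and sent back so the model can write a single answer covering both questions.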

Assistants API, Knowledge Retrieval, & Code Interpreter

This was also a massive update...no more having to use multiple third-party or open source solutions for building AI agents.

An assistant is a purpose-built AI that has specific instructions, leverages extra knowledge, and can call models and tools to perform tasks.

Without being too hyperbolic, this update will surely bring a new wave of AI startups. How?

The Assistants API provides access to three key tools:

- Retrieval: You can bring knowledge from outside documents or databases into whatever you're building:

This means you don’t need to compute and store embeddings for your documents, or implement chunking and search algorithms.

- Code interpreter: This allows you to write and run Python in a sandboxed environment, meaning you can generate graphs, charts, solve math problems, and much more.

- Function calling: This opens assistants up to any function or external API you want to access.
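To make the three tools concrete, here's a hedged sketch of the configuration you might pass when creating an assistant. It only builds the payload as a plain dictionary and makes no API call. The tool type names (`code_interpreter`, `retrieval`, `function`) and the `gpt-4-1106-preview` model name reflect the API as I understand it at launch and may differ in later versions; the assistant name, instructions, and function schema are hypothetical.

```python
def build_assistant_config(name: str, instructions: str, functions: list) -> dict:
    """Build an assistant configuration that enables all three tool types."""
    tools = [
        {"type": "code_interpreter"},  # sandboxed Python execution
        {"type": "retrieval"},         # knowledge from uploaded documents
    ]
    # Each custom function is described with a JSON Schema for its parameters.
    tools += [{"type": "function", "function": spec} for spec in functions]
    return {
        "name": name,
        "instructions": instructions,
        "model": "gpt-4-1106-preview",  # assumed launch-time GPT-4 Turbo model name
        "tools": tools,
    }


# Hypothetical function schema for illustration.
ratios_function = {
    "name": "get_financial_ratios",
    "description": "Fetch financial ratios for a company and period.",
    "parameters": {
        "type": "object",
        "properties": {
            "ticker": {"type": "string"},
            "period": {"type": "string"},
        },
        "required": ["ticker", "period"],
    },
}

config = build_assistant_config(
    name="Financial Analyst",
    instructions="Answer questions about public companies using the available tools.",
    functions=[ratios_function],
)
print([tool["type"] for tool in config["tools"]])
```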

While everyone is focused on the new GPTs and GPT Store (discussed below), in my opinion, the Assistants API was the biggest announcement from the event.

As Altman said about AI agents:

The upsides of this are going to be tremendous.

OpenAI Introduces GPTs

As Altman highlights, these capabilities of building AI assistants are not just available to developers...you can now build "GPTs" with natural language.

What are GPTs?

GPTs are tailored versions of ChatGPT for a specific purpose.

You can build a customized version of ChatGPT for almost anything, with instructions, expanded knowledge and actions, and you can publish it for others to use.

You can, in effect, program a GPT with language just by talking to it.

Here's a summary of the new GPTs:

- GPTs are custom versions of ChatGPT that are tailored to specific purposes.

- These GPTs can assist in various tasks including learning board game rules, teaching kids math, and designing stickers, all without any coding knowledge.

- GPTs can be easily created by providing instructions, imparting extra knowledge, and defining their functionality.

- Notable examples of GPTs already available for ChatGPT Plus and Enterprise users include Canva and Zapier AI Actions.

- OpenAI will launch a GPT Store for sharing GPTs publicly and rewarding the most used ones.

- Developers can connect external data such as databases, emails, e-commerce orders, etc., to these GPTs, giving them the ability to interact with the real world.

- Enterprise customers can deploy internal-only GPTs that suit their specific needs, with Amgen, Bain, and Square already making use of such opportunities.

- Lastly, ChatGPT Plus has been updated and simplified to include all the features in one place, and it can now search through PDFs and other document types.

You can learn more about GPTs below:

Build GPTs with Natural Language

OpenAI knows that not everyone can code, so they've made it possible to build GPTs with natural language. Below you can find a clip of Altman building a GPT in a few minutes:

The GPT Store

Later this month, we're going to launch GPT Store. You can list a GPT there, and we'll be able to feature the best and most popular GPTs.

GPT Revenue Sharing

We're going to pay people who develop the most useful and the most used GPTs a portion of our revenue.

More information on the GPT store coming soon.

New Modalities: DALLE-3, Vision, and Text-To-Speech

As many expected, DALLE-3, GPT-4 with Vision, and the new text-to-speech model are all now available in the API.

GPT-4 Turbo can now accept images as inputs via the API.
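As a rough sketch, an image request is just a chat message whose content mixes text and image parts. The snippet below builds that payload without calling the API. The `gpt-4-vision-preview` model name is my assumption of the launch-time identifier, and the image URL is a placeholder.

```python
def build_vision_request(prompt: str, image_url: str, max_tokens: int = 300) -> dict:
    """Build a chat request that pairs a text prompt with one image."""
    return {
        "model": "gpt-4-vision-preview",  # assumed launch-time model name
        "max_tokens": max_tokens,
        "messages": [{
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {"type": "image_url", "image_url": {"url": image_url}},
            ],
        }],
    }


request = build_vision_request(
    "Write a one-sentence caption for this image.",
    "https://example.com/photo.jpg",  # placeholder URL
)
print([part["type"] for part in request["messages"][0]["content"]])
```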

With our new text-to-speech model, you'll be able to generate natural sounding audio from text in the API with 6 pre-set voices to choose from.

This unlocks a lot of use cases like language learning, voice assistants, and much more. You can check out an example of the voice assistants in the documentation here.
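For text-to-speech, a request boils down to a model, a voice, and the input text. Here's a small sketch that validates the voice and builds the request arguments without calling the API. The `tts-1` model name and the six voice names are what I understand the launch documentation to list, so treat them as assumptions; the helper name is my own.

```python
# The six preset voices, as I understand the launch documentation.
TTS_VOICES = ("alloy", "echo", "fable", "onyx", "nova", "shimmer")


def build_tts_request(text: str, voice: str = "alloy") -> dict:
    """Build the arguments for a speech request, rejecting unknown voices."""
    if voice not in TTS_VOICES:
        raise ValueError(f"Unknown voice {voice!r}; choose one of {TTS_VOICES}")
    return {"model": "tts-1", "voice": voice, "input": text}


tts_request = build_tts_request("Hello from DevDay!", voice="nova")
print(tts_request["voice"])
```

With the official Python client, these arguments would typically be passed to the speech endpoint and the returned audio saved to a file.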

Fine Tuning & Customization

As Altman highlighted, they've made a few improvements to fine-tuning, with GPT-4 fine tuning coming soon.

Fine tuning has been working really well for GPT 3.5 Turbo...starting today, we're going to expand that to the 16k version of the model.

We're also inviting active fine tuning users to apply for the GPT-4 fine-tuning, experimental access program.

Will certainly be applying to that, stay tuned.

Custom Models for Enterprise

In addition, for a select few companies with big budgets, you can now build a completely custom model with OpenAI...

You may want a model to learn a completely new knowledge domain or to use a lot of proprietary data...

With custom models, our researchers will work closely with the company to make a great custom model, especially for them and their use case, with our tools. This includes updating every step of the model training process.

Reproducible Outputs

Another developer-focused feature they introduced is a new seed parameter that can be used for reproducible outputs.

You can pass a seed parameter, and it will make the model return consistent outputs. This, of course, gives you a higher degree of control over model behaviour.

Use cases of the new seed parameter include:

- Replaying requests for debugging

- Writing comprehensive unit tests

- Generally having a higher degree of control over model behaviour
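Here's a minimal sketch of how the seed fits into a request. It builds the request arguments only and makes no API call. As I understand it, responses also include a `system_fingerprint` field, and outputs are only expected to match when the seed, the other parameters, and that fingerprint are all the same; the helper names and the seed value are my own.

```python
def build_seeded_request(prompt: str, seed: int = 42) -> dict:
    """Build a chat request with a fixed seed for (mostly) reproducible outputs."""
    return {
        "model": "gpt-4-1106-preview",  # assumed launch-time GPT-4 Turbo model name
        "seed": seed,
        "temperature": 0,
        "messages": [{"role": "user", "content": prompt}],
    }


def same_backend(fingerprint_a, fingerprint_b) -> bool:
    """Two responses are only comparable if their system fingerprints match."""
    return fingerprint_a is not None and fingerprint_a == fingerprint_b


first = build_seeded_request("Summarize the DevDay keynote in one sentence.")
replay = build_seeded_request("Summarize the DevDay keynote in one sentence.")
print(first == replay)  # identical requests are the precondition for replaying
```

In a unit test, you would send both requests, check `same_backend` on the two responses, and only then assert that the outputs are equal.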

More Control Over Responses

Next up, this one is more applicable to developers, but they introduced a new JSON mode.

We've heard loud and clear that developers need more control over the model's responses and outputs.

Here's how they've addressed this with JSON mode:

- It ensures the model will respond in valid JSON

- This makes calling APIs much easier
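As a quick sketch, JSON mode is switched on with a `response_format` argument, and, as far as I know, the API also expects the word "JSON" to appear somewhere in your messages. The snippet below builds such a request and parses a simulated reply, so it runs without an API key. The model name, prompts, and reply are placeholders I made up.

```python
import json


def build_json_mode_request(user_prompt: str) -> dict:
    """Build a chat request that asks the model to reply with a JSON object."""
    return {
        "model": "gpt-4-1106-preview",  # assumed launch-time GPT-4 Turbo model name
        "response_format": {"type": "json_object"},
        "messages": [
            # JSON mode expects the instructions to mention JSON explicitly.
            {"role": "system", "content": "You are a helpful assistant. Reply in JSON."},
            {"role": "user", "content": user_prompt},
        ],
    }


def parse_reply(content: str) -> dict:
    """Parse the model's reply; with JSON mode this should not raise."""
    return json.loads(content)


json_request = build_json_mode_request("Which ticker symbol does Tesla trade under?")
simulated_reply = '{"ticker": "TSLA"}'  # placeholder standing in for a real response
print(parse_reply(simulated_reply)["ticker"])
```

Note that JSON mode only promises valid JSON, not that the object matches any particular schema, so you'd still want to validate the keys you rely on.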

GPT-4 Turbo Pricing

GPT-4 Turbo is considerably cheaper than GPT-4 by a factor of 3x for prompt tokens and 2x for completion tokens.

Also, they've reduced the cost of GPT 3.5 Turbo, so now the cost of the GPT 3.5 Turbo 16k model is cheaper than the previous 3.5 Turbo model.
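To see what the 3x and 2x factors mean for a real workload, here's a small cost sketch. The per-1K-token prices are the launch-time list prices as I recall them ($0.03 / $0.06 for GPT-4 and $0.01 / $0.03 for GPT-4 Turbo), so treat them as assumptions and check the current pricing page before relying on them.

```python
# Assumed launch-time list prices in USD per 1K tokens: (prompt, completion).
PRICES_PER_1K = {
    "gpt-4": (0.03, 0.06),
    "gpt-4-turbo": (0.01, 0.03),
}


def request_cost(model: str, prompt_tokens: int, completion_tokens: int) -> float:
    """Compute the cost of one request from its token counts."""
    prompt_price, completion_price = PRICES_PER_1K[model]
    return prompt_tokens / 1000 * prompt_price + completion_tokens / 1000 * completion_price


# Example workload: a 10,000-token prompt with a 1,000-token completion.
old_cost = request_cost("gpt-4", 10_000, 1_000)
new_cost = request_cost("gpt-4-turbo", 10_000, 1_000)
print(f"GPT-4: ${old_cost:.2f}, GPT-4 Turbo: ${new_cost:.2f}")
```

Because prompts are usually much longer than completions, the 3x cut on prompt tokens tends to dominate the savings.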

ChatGPT Updates

Last but not least, of course ChatGPT now uses GPT-4 Turbo with all the latest improvements, latest knowledge cutoff, etc.

It can now browse the web when it needs to, write and run code, analyze data, take and generate images, and much more.

Model picking is gone...starting today. You will not have to use the dropdown menu, all of this will just work together.

Summary: OpenAI DevDay Highlights

As expected, OpenAI did not disappoint at their first dev day. Personally, I will be experimenting with all these new API features over the coming days, weeks...and months, so stay tuned for more in-depth tutorials on all these features.

In case you skimmed, here's a summary of the highlights:

- 🚀 GPT-4 Turbo: It now supports a 128K context window, enabling large amounts of text as context.

- 🛠️ Assistants API: It provides access to tools such as Code Interpreter, Retrieval, and Function calling.

- ♻️ Parallel function calling: This allows multiple function calls in a single request, so one prompt can accomplish multiple tasks.

- 👀 Vision: GPT-4 Turbo now accepts images as inputs, supporting caption generation and detailed image analysis.

- 🗣️ Text-to-Speech (TTS): The new TTS model offers human-like speech from text with six available voices.

- 💰 Pricing & rate limits: Lower prices and higher rate limits across the platform for developers.

- 🏢 GPTs: Customized versions of ChatGPT that can assist in various tasks; GPTs can be shared on the new GPT store, with most used ones rewarded.

- 👁️🗨️ New Modalities: DALLE-3, Vision, and Text-To-Speech are now available in the API.