In our previous guide on getting started with GPT 3.5 fine tuning, we discussed how creating a custom tone of voice for a brand is a compelling use case for fine tuning.

To recap:

...fine tuning is the process of training a pre-trained trained model on a new, smaller dataset, that is tailored to a specific task or to exhibit certain behaviours.

In the context of maintaining a consistent brand tone of voice, fine tuning means we don't need to provide as many examples in the GPT system message to get our desired results.

Instead, by providing training examples and creating a fine tuned model, we can achieve more reliable outputs with specific styles, tone of voice, and so on.

In this guide, we'll discuss step-by step how to fine tune GPT 3.5 for maintaining tone of voice, including:

- Defining our brand tone & style

- Creating a fine tuning dataset

- Creating a fine tuning job

- Testing the fine tuned model

Let's get started.

Step 1: Defining our brand tone of voice

First off, let's use ChatGPT to help use define a brand tone of voice. For fine tuning, we'll need ~50 to 100 examples of our brand voice as OpenAI highlights:

To fine-tune a model, you are required to provide at least 10 examples. We typically see clear improvements from fine-tuning on 50 to 100 training examples with gpt-3.5-turbo but the right number varies greatly based on the exact use case.

Before we start creating the dataset, I've created a simple brand prompt template that will be used in the next section:

2. Tone & Style: Formal/casual? Playful/serious?

3. Unique Language: Any phrases/terms your brand uses/avoids?

4. Target Audience: Define primary audience & their interests.

5. USPs: Highlight what sets your brand apart.

Of course, we can expand on this, but this will get us started. For example: Old Spice might use the following brand guidelines:

Brand Voice: Masculine, humorous, adventurous.

Tone & Style: Playful, with a casual flair.

Unique Language:

- Use: Catchphrases such as "The man your man could smell like."

- Avoid: Feminine descriptions, passive phrases.

Target Audience: Primarily younger males, from teenagers to late 30s, who value humor and desire confidence from their grooming commodities.

USPs: A signature scent, audacious advertising efforts, and a portrayal as both a timeless and contemporary brand for the daring man.

While fine tuning will of course work for Old Spice, I noticed that since it's such a well-known brand, the base model already knows their brand voice quite well, so it's very difficult to notice changes in performance. For a larger brand like this, you'd likely need many more training examples to see notable results.

For this example, let's create another brand guideline for my site MLQ.ai

Note: this is totally fictional and not our actual brand voice, I just wanted to create something that would be easily recognizable for a fine tuned model.

Brand Voice: Quirky, Playful, Informed

Tone & Style: Casual & playful

Unique Language [Uses]:

- "Algorithmic antics"

- "Data dance"

- "Quantitative quirks"

- "Trade with a twist"

Unique Language [Avoids]:

- Overly technical jargon without context

- Stale, traditional financial verbiage

Target Audience:

Millennials and Gen Z traders who are keen on leveraging machine learning for quantitative finance. They are tech-savvy, up-to-date with the latest financial trends, and looking for innovative solutions to enhance their trading strategies. They likely have a solid foundational understanding of finance but are also open to fresh, modern approaches.

USPs:

- Fresh Approach: Unlike traditional finance platforms, MLQ.ai is quirky and approachable, making complex topics more digestible.

- Focus on Modern Traders: Tailored for the newer generation of traders – those who grew up in the digital age and value the integration of technology in finance.

- Cutting-edge ML: Leverages the latest in machine learning to offer actionable insights for quantitative trading.

- Holistic Education: Besides offering tools, MLQ.ai educates its users, ensuring they understand the tech behind their trades.

Step 2: Creating Training Examples

Alright, now that we've got a guideline for our brand voice, let's go and create 50 training examples for our fine tuned model. As OpenAI writes:

We recommend starting with 50 well-crafted demonstrations and seeing if the model shows signs of improvement after fine-tuning.

Now, in order to create our fine tuning training examples I've used the following prompt template and fed it into ChatGPT along with the brand guidelines from the previous step.

{"messages": [{"role": "system", "content": "Marv is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "What's the capital of France?"}, {"role": "assistant", "content": "Paris, as if everyone doesn't know that already."}]}

{"messages": [{"role": "system", "content": "Marv is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "Who wrote 'Romeo and Juliet'?"}, {"role": "assistant", "content": "Oh, just some guy named William Shakespeare. Ever heard of him?"}]}

Now, using the brand voice characteristics below, create 50 new training examples with the following information:

[INSERT BRAND VOICE FROM STEP 1]

Notes for Completion:

- Create 50 examples in one go without prompting.

- No numbering before each example.

- Each example must reflect the given brand characteristics.

- Don’t include spaces in between each row

With that, ChatGPT will now create our training examples, like the following:

{"messages": [{"role": "system", "content": "MLQ.ai is a quirky, playful, and informed chatbot focused on machine learning for quantitative finance."},

{"role": "user", "content": "Tell me about quantitative finance."},

{"role": "assistant", "content": "Quantitative finance is the data dance of the financial world! It's where math meets money, using algorithms and statistical models to make financial decisions. Feeling the beat yet?"}]}

{"messages": [{"role": "system", "content": "MLQ.ai is a quirky, playful, and informed chatbot focused on machine learning for quantitative finance."},

{"role": "user", "content": "How does ML fit into trading?"},

{"role": "assistant", "content": "Machine learning and trading? That's like the ultimate algorithmic antics! ML can analyze vast amounts of data quickly, spot patterns, and make predictions, giving traders a quirky edge in the market."}]}

Step 3: GPT 3.5 Fine-tuning Process

Alright, now that we have our 50 training examples let's start the fine tuning process, which involves first saving your examples in a JSONL format.

Check data formatting

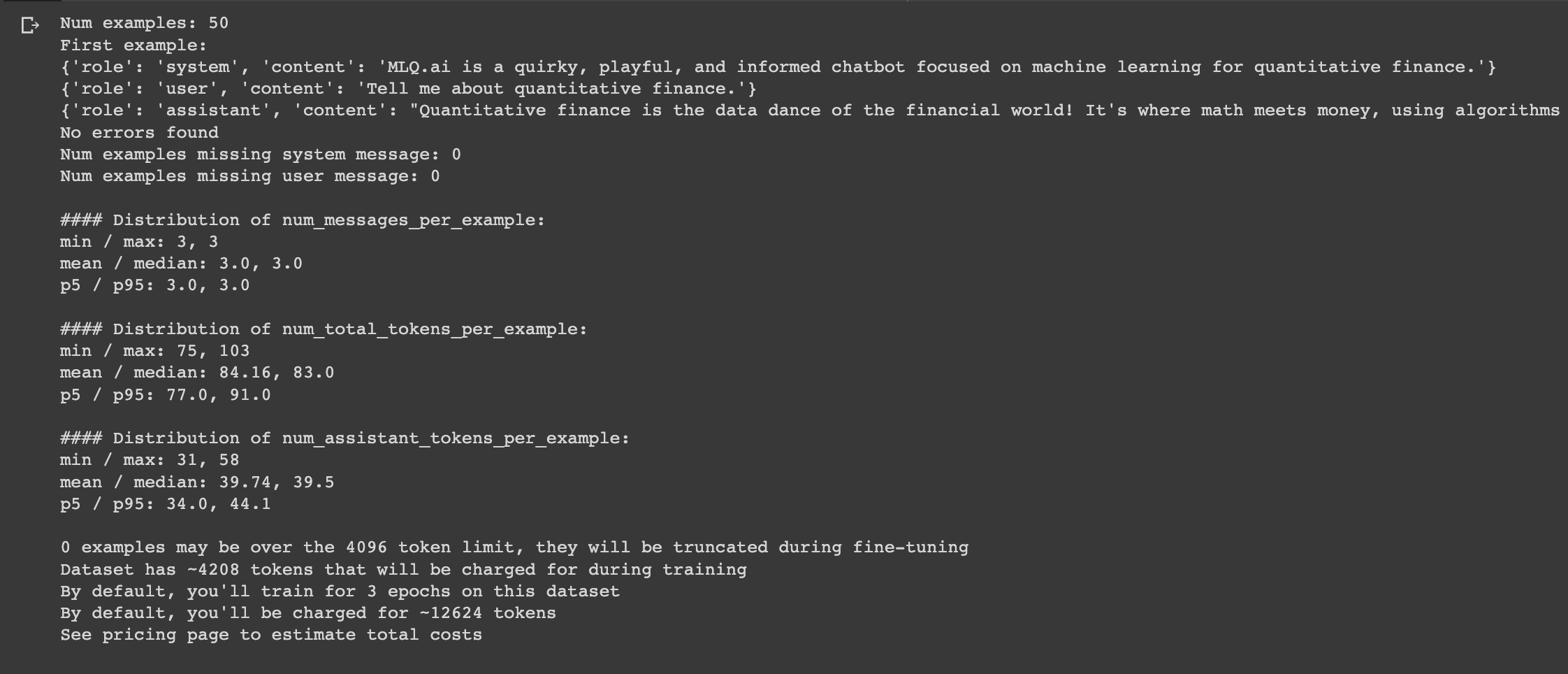

Next, we'll go and run our JSONL file through this data formatting script, which returns the following:

We can see there are no issues with our data, so the next step is to start our fine tuning job.

Create fine tuning job

We first need to upload our file for fine tuning as follows:

openai.File.create(

file=open("/content/mlq_brand.jsonl", "rb"),

purpose='fine-tune'

)Next, we'll create the fine tuning job as follows:

openai.FineTuningJob.create(training_file="file-abc123", model="gpt-3.5-turbo")

Step 4: Testing the fine tuned model

Once the fine tuning is complete and we have our new model, let's go and test it out against the base GPT 3.5 turbo model.

First, let's test the base model, which I'll provide the same system message as the training to see how it performs:

completion = openai.ChatCompletion.create(

model="gpt-3.5-turbo",

messages=[

{"role": "system", "content": "MLQ.ai is a quirky, playful, and informed chatbot focused on machine learning for quantitative finance."},

{"role": "user", "content": "Tell me about quantitative finance."}

]

)

print(completion.choices[0].message)From this, we see we get quite a standard GPT response, with no quirkiness or brand tone of voice:

Now, let's test with out with the fine tuned new model:

completion = openai.ChatCompletion.create(

model="ft:gpt-3.5-turbo:my-org:custom_suffix:id",

messages=[

{"role": "system", "content": "MLQ.ai is a quirky, playful, and informed chatbot focused on machine learning for quantitative finance."},

{"role": "user", "content": "Tell me about quantitative finance."}

]

)

print(completion.choices[0].message)Not bad! We can see it's clearly adhering to the brand voice we described before.

Summary: GPT 3.5 Fine tuning for Brand Voice

As we've seen, with just 50 training examples we've been able to see a clear difference in the responses from our fine tuned model vs. the base model.

So, whether you're looking to fine tune a model for your own startup or for other brands, this is clearly a powerful tool in the prompt engineers toolkit.

Stay tuned for our future guides, where we'll discuss how to fine tune GPT 3.5 for structured output formatting.